As AI and HPC (High-Performance Computing) applications grow rapidly, data volumes and computing density continue to increase. Whether it’s training large language models, simulating climate change, or processing genetic sequences, these workloads heavily rely on fast and stable data access. If storage systems fail to keep pace with GPUs and algorithms, they will become critical bottlenecks to both performance and cost-efficiency.

Similarly, scenarios such as 4K/8K video editing, VDI, enterprise virtualization, and cloud services also demand higher stability and real-time processing capabilities from storage systems. To address these challenges, All-Flash architectures and iSCSI + RDMA technology are increasingly becoming mainstream choices. However, to fully unlock their potential, the key lies in the continuous optimization of the storage system’s software layer.

The Next Step in Storage Architecture Optimization: The Critical Role of the Software Layer

QNAP has consistently focused on maximizing the potential of underlying resources in the QuTS Hero operating system. The system already incorporates several key performance-enhancing designs, and QNAP continues to improve it across multiple layers to meet the evolving demands of computing and storage.

However, software performance improvement is an ongoing endeavor, especially as advancements in hardware continually open up new opportunities for deeper optimization. Through system analysis, the QNAP team has identified two key system-level focus areas as entry points for ongoing optimization:

1. Enhancing Performance for Multi-Core and Parallel Computing

As processor core counts keep increasing, systems gain greater capacity for parallel processing. Three measures help realize this multi-core performance in practical applications: introducing a multi-threading model and task decoupling mechanisms into more work modules; continuously analyzing workload characteristics and thread allocation strategies within the processing flow; and optimizing thread affinity along with parallel processing mechanisms.

2. Continuous Improvement of Memory and I/O Subsystem Efficiency

Although processor performance has improved rapidly, the design of I/O channels still largely determines I/O efficiency. By continuously analyzing bottlenecks in data access paths and implementing the necessary design improvements, transmission performance can be further enhanced, so that the system as a whole operates cohesively and at maximum efficiency.
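The multi-threading and task decoupling idea from the first focus area can be illustrated with a small sketch. It is not QuTS Hero code: the function names `split_blocks` and `checksum_blocks`, the 4 KiB block size, and the use of CRC32 as the per-block task are all assumptions chosen for illustration. The point is only that work split into independent blocks can be handed to a pool of workers without the blocks depending on each other.

```python
from concurrent.futures import ThreadPoolExecutor
import zlib


def split_blocks(data, block_size):
    """Split a buffer into independent block views (no bytes are copied)."""
    view = memoryview(data)
    return [view[i:i + block_size] for i in range(0, len(view), block_size)]


def checksum_blocks(data, block_size=4096, workers=4):
    """Compute one CRC32 per block using a pool of worker threads.

    Each block is an independent task, so workers never wait on each other.
    pool.map returns results in the same order as the input blocks.
    """
    blocks = split_blocks(data, block_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(zlib.crc32, blocks))


if __name__ == "__main__":
    payload = bytes(range(256)) * 64  # 16 KiB of sample data
    print(len(checksum_blocks(payload)), "blocks checksummed")
```

How much speedup a design like this yields depends on how independent the tasks really are and on how threads are placed on cores, which is why the analysis of workload characteristics and thread affinity matters.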

Building Efficient Data Channels: The Integration of iSCSI and ZFS in Practice

In response to these bottlenecks, the QNAP team carried out in-depth optimizations of the core data channels. We adopted an integrated design approach that spans the network stack, the iSCSI transport layer, and the backend file system (with ZFS at its core), aiming to streamline every stage of the data flow and achieve truly high-speed transmission.

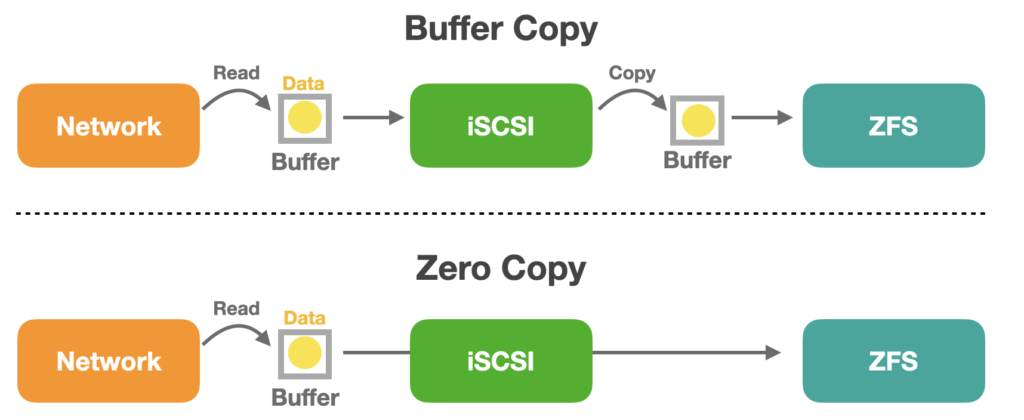

Introducing Zero-Copy: Reducing Data Movement and Unleashing Performance

In modern system architectures, software integration between the communication protocol layer and the file system layer is key to achieving efficient data exchange and storage. QuTS Hero enables Zero-Copy data transfer from the network stack to the iSCSI layer and down to the file system layer, avoiding multiple data copies between core modules during transmission. This significantly reduces CPU overhead and enhances data transfer efficiency.

Zero-Copy not only reduces memory bandwidth consumption but also lowers latency, which makes it especially beneficial for I/O-intensive workloads such as AI, HPC, and virtualization platforms. This integrated design turns iSCSI from a traditional storage protocol into a vital component of a high-performance data path.
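As a user-space analogy only, the following sketch shows the general idea of passing one buffer through several layers instead of copying it at each boundary. It does not reflect how QuTS Hero implements Zero-Copy inside the system. The 4-byte length header, the name `receive_message`, and the buffer size are assumptions made for this example. The data is received directly into a preallocated buffer, and the next layer gets a `memoryview` slice that refers to the same memory.

```python
import socket

HEADER_LEN = 4  # hypothetical header: payload length as a big-endian integer


def receive_message(sock, buf):
    """Read one length-prefixed message into buf and return a payload view.

    The returned memoryview refers to buf itself, so handing it to the next
    layer does not duplicate the payload bytes.
    """
    view = memoryview(buf)
    received = 0
    # Read exactly the header first.
    while received < HEADER_LEN:
        n = sock.recv_into(view[received:HEADER_LEN])
        if n == 0:
            raise ConnectionError("peer closed before header was complete")
        received += n
    length = int.from_bytes(view[:HEADER_LEN], "big")
    end = HEADER_LEN + length
    if end > len(buf):
        raise ValueError("message does not fit in the receive buffer")
    # Read the payload straight into the same buffer.
    while received < end:
        n = sock.recv_into(view[received:end])
        if n == 0:
            raise ConnectionError("peer closed before payload was complete")
        received += n
    return view[HEADER_LEN:end]


if __name__ == "__main__":
    sender, receiver = socket.socketpair()
    data = b"block-0001"
    sender.sendall(len(data).to_bytes(HEADER_LEN, "big") + data)
    payload = receive_message(receiver, bytearray(64))
    print(bytes(payload))
    sender.close()
    receiver.close()
```

The only explicit copy in the usage example is the final `bytes(payload)` call used for printing. A lower layer that accepts buffer objects could consume the view directly.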

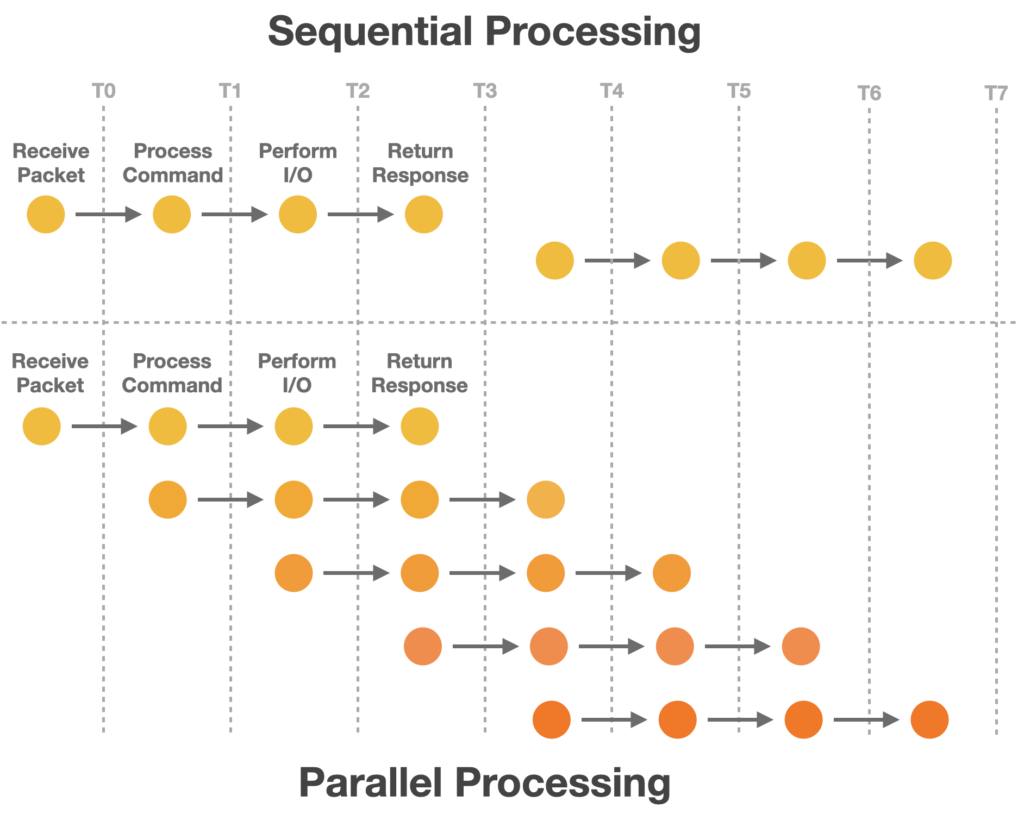

Parallel Decoupling: Reshaping the iSCSI Data Processing Workflow

Traditionally, the iSCSI module handles packet reception, command parsing, data movement, and response transmission sequentially. Processing commands and data in this linear fashion easily creates bottlenecks, because each stage must wait for the previous one to finish. To make the module more responsive in real-world applications, the architecture was redesigned around task decoupling and parallelization.
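A minimal sketch of task decoupling, assuming a simplified two-stage flow: one thread parses commands while a second thread handles the data work, connected by a queue. The command format (`"read abc"`), the stage names, and the result strings are hypothetical and are not taken from the iSCSI protocol or from QNAP's implementation.

```python
import queue
import threading


def run_pipeline(raw_commands):
    """Parse commands and process them in two decoupled stages."""
    parsed = queue.Queue()
    results = []
    stop = object()  # sentinel that tells the data stage to finish

    def parse_stage():
        # Stage 1: turn raw text into (operation, argument) pairs.
        for raw in raw_commands:
            op, _, arg = raw.partition(" ")
            parsed.put((op, arg))
        parsed.put(stop)

    def data_stage():
        # Stage 2: consume parsed commands as soon as they are available,
        # without waiting for the parser to finish the whole batch.
        while True:
            item = parsed.get()
            if item is stop:
                break
            op, arg = item
            results.append(f"{op.upper()}:{len(arg)}")

    threads = [threading.Thread(target=parse_stage),
               threading.Thread(target=data_stage)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    print(run_pipeline(["read abc", "write hello"]))
```

Because the queue is first-in, first-out and there is a single consumer, results keep the order of the incoming commands even though the two stages overlap in time.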

We separated the scheduling of data processing from command parsing so that the two run in parallel, which reduces mutual blocking between processing stages. We also introduced Lock Splitting, which replaces a single global lock with several finer-grained locks to avoid resource contention. Together, these changes further reduce synchronization overhead and data movement costs in parallel processing, ultimately enabling high-performance iSCSI services.
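The idea behind Lock Splitting can be sketched with a small table that uses several locks instead of one. This example uses the variant often called lock striping, where keys are distributed over a fixed number of independently locked partitions. The class name `StripedCounterTable` and the stripe count are assumptions for illustration and say nothing about which locks QuTS Hero actually splits.

```python
import threading


class StripedCounterTable:
    """A counter table guarded by several locks instead of one global lock."""

    def __init__(self, stripes=8):
        self._locks = [threading.Lock() for _ in range(stripes)]
        self._tables = [{} for _ in range(stripes)]

    def _index(self, key):
        # Keys that land in different stripes never contend for the same lock.
        return hash(key) % len(self._locks)

    def increment(self, key, amount=1):
        i = self._index(key)
        with self._locks[i]:
            table = self._tables[i]
            table[key] = table.get(key, 0) + amount

    def get(self, key):
        i = self._index(key)
        with self._locks[i]:
            return self._tables[i].get(key, 0)


if __name__ == "__main__":
    table = StripedCounterTable()

    def worker():
        for n in range(1000):
            table.increment(n % 16)

    threads = [threading.Thread(target=worker) for _ in range(4)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    print(table.get(0))
```

With a single global lock, every `increment` call would serialize. With stripes, only calls that map to the same stripe wait for each other.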

Cross-Layer Collaborative Scheduling: iSCSI and ZFS Performance Integration

In modern storage systems, performance synergy between the iSCSI transport module and the ZFS file system is key to overall I/O performance. Coordinated thread scheduling strategies allow the two layers to run side by side without interfering with each other under high concurrency, which further improves multi-core utilization and keeps data processing smooth.
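One simple way to picture coordinated scheduling is to give each layer its own disjoint set of CPU cores so their threads do not compete for the same core. The sketch below only computes such a split. The function name `partition_cpus` and the `transport_share` parameter are hypothetical, and the actual scheduling strategy used in QuTS Hero is not described in this article.

```python
def partition_cpus(cpus, transport_share=0.5):
    """Split CPU ids into two disjoint groups, one per layer.

    Returns (transport_cpus, filesystem_cpus). Each group gets at least
    one CPU, so at least two CPUs are required.
    """
    ordered = sorted(cpus)
    if len(ordered) < 2:
        raise ValueError("need at least two CPUs to partition")
    cut = int(len(ordered) * transport_share)
    cut = max(1, min(len(ordered) - 1, cut))  # keep both groups non-empty
    return ordered[:cut], ordered[cut:]


if __name__ == "__main__":
    transport, filesystem = partition_cpus(range(8))
    print("transport threads on CPUs:", transport)
    print("file system threads on CPUs:", filesystem)
    # On Linux, a thread could then be restricted to its group with
    # os.sched_setaffinity(0, group); that step is omitted here because
    # it is platform-specific.
```

A static split like this is the simplest possible policy. A real system would likely adapt the share to the observed load of each layer.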

Conclusion: Software Practices to Unlock Hardware Potential

After optimization, we observed significant performance improvements across multiple simulated test scenarios, especially under random I/O loads, with notable gains in overall system responsiveness and processing efficiency. In some test cases, performance improved by up to approximately 50%, which shows how effective these system optimizations can be in specific application scenarios.

These results confirm that QNAP’s coordinated optimizations in key modules such as iSCSI, ZFS, and the scheduling logic effectively unlock the system’s potential for multi-core processing and high-performance storage architectures. They improve the overall efficiency of the data transmission and storage paths, providing a stable, reliable, and high-performing technical foundation for high-density computing and virtualization applications. They also demonstrate QNAP’s commitment to continuously optimizing the product experience and improving its ability to support critical applications.

In the future, we will continue to invest in architectural enhancements and performance optimizations to provide a stable, predictable, and scalable storage platform for usage scenarios of different scales and load types.